Raw Data Collection and Preparation

The project began by gathering the raw data and performing an initial cleaning pass to make it easier to work with.

This step included standardizing the data, converting columns to the correct data types, and removing unnecessary information.
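A minimal pandas sketch of these three cleaning steps is shown below. The column names and values are hypothetical, and "standardizing" is assumed here to mean consistent formatting of column names and text values. The project's actual cleaning rules are not described, so treat this as an illustration rather than the original code.

```python
import pandas as pd

# Hypothetical raw data; column names and values are illustrative only.
raw = pd.DataFrame({
    " Order ID ": ["001", "002", "003"],
    "Order Date": ["2023-01-05", "2023-02-10", "2023-03-15"],
    "Amount": ["10.5", "20", "n/a"],
    "Region": [" north", "South ", "NORTH"],
    "Internal Notes": ["x", "y", "z"],  # assumed to be unneeded for analysis
})


def clean(df: pd.DataFrame) -> pd.DataFrame:
    df = df.copy()
    # Standardize column names: trim whitespace, lowercase, spaces -> underscores.
    df.columns = df.columns.str.strip().str.lower().str.replace(" ", "_")
    # Standardize text values: trim whitespace and lowercase.
    df["region"] = df["region"].str.strip().str.lower()
    # Convert columns to proper types; unparseable amounts become NaN.
    df["order_date"] = pd.to_datetime(df["order_date"], format="%Y-%m-%d")
    df["amount"] = pd.to_numeric(df["amount"], errors="coerce")
    # order_id stays a string so leading zeros are preserved.
    # Remove a column that is not needed for the analysis.
    df = df.drop(columns=["internal_notes"])
    return df


cleaned = clean(raw)
print(cleaned.dtypes)
```

Using `errors="coerce"` turns bad entries such as `"n/a"` into `NaN` instead of raising, which leaves them to be handled by the imputation step rather than silently dropped.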

Clean data was crucial for accurate analysis and reliable results.

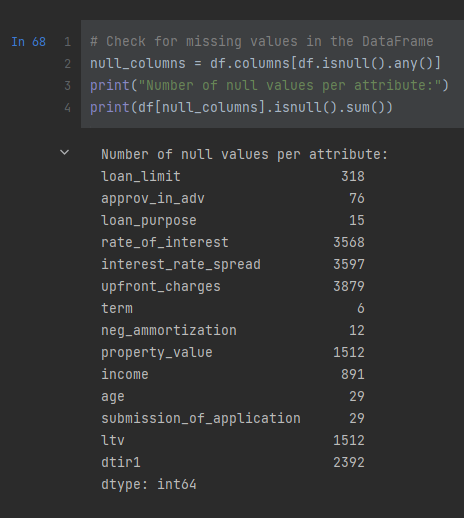

To handle missing values in the numerical columns, the `KNNImputer` class from scikit-learn was used.

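For each missing entry, it finds the k most similar rows (5 by default) among those that have a value in that column, measuring similarity with a Euclidean distance that ignores missing coordinates, and fills the gap with the average of those neighbors' values. Because each estimate draws on related records rather than a single column-wide statistic such as the mean, it usually produces more realistic values for the gaps.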
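A minimal sketch of applying `KNNImputer` to only the numerical columns of a DataFrame is shown below, assuming the scikit-learn implementation. The data and column names are hypothetical, and `n_neighbors=2` is chosen only so the tiny example has enough donors; the project's actual setting is not stated.

```python
import numpy as np
import pandas as pd
from sklearn.impute import KNNImputer

# Hypothetical data; column names and values are illustrative only.
df = pd.DataFrame({
    "age": [1.0, 3.0, np.nan, 8.0],
    "income": [2.0, 4.0, 6.0, 8.0],
    "score": [np.nan, 3.0, 5.0, 7.0],
    "city": ["A", "B", "A", "C"],  # non-numeric, left untouched
})

# Select only numerical columns; KNNImputer works on numeric arrays.
num_cols = df.select_dtypes(include="number").columns

# Each missing value becomes the mean of that column over the 2 nearest
# rows (nan_euclidean distance) that have a value in that column.
imputer = KNNImputer(n_neighbors=2)
df[num_cols] = imputer.fit_transform(df[num_cols])
print(df)
```

Here the missing `age` becomes 5.5 (the mean of its two nearest neighbors, 3.0 and 8.0) and the missing `score` becomes 4.0. Since the distance is computed on raw values, columns on very different scales can dominate the neighbor search, so scaling the numerical columns before imputing is a common precaution.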